We're back for another round of exploring game algorithms using the simple game Color Walk. In the last post, we finally reached the point of evaluating Dijkstra's algorithm, the classic, efficient graph algorithm for finding shortest paths. It performed pretty well against the top dogs, Greedy Look-Ahead (GLA) and the GLA-BFS hybrid, especially when it came to consistently finding good move sequences. However, it failed to find the shortest sequences when a board could be solved in under 29 moves, so we're going to see if we can squeeze out any more performance by modifying Dijkstra's algorithm further. To do that, we're going to try combining Dijkstra's algorithm with GLA, running Dijkstra's algorithm in more than one pass, and changing the heuristic we use to guide the search.

Dijkstra and an Assist

We saw in the last post that we couldn't use Dijkstra's algorithm in its purest form because that would still require searching the entire move graph to find the true shortest path from the source vertex (the start-of-game) to the sink vertex (the end-of-game). In fact, Dijkstra's algorithm, when run to completion, will find the shortest path from the source vertex to every other vertex in the graph. Since the move graph is actually a tree, we don't care about the shortest path to any of the vertices except the end-of-game vertex. Because of that, we limited the algorithm to search only until the end-of-game vertex was found, and then tried to balance the search heuristic so that we reached that vertex by as short a path as possible.

This tactic of using a heuristic search and stopping once a goal is reached is actually a variant of Dijkstra's algorithm that goes by another name: A* search. This algorithm is a popular way to do path finding in games where computer-controlled characters are moving around in a 2D or 3D space. The natural heuristic in that application is the straight-line distance from the current position of the character to the target position, and A* search is pretty effective at this task.
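To make the connection concrete, here is a minimal sketch of that path-finding use case on a tiny grid. It is not code from Color Walk: findPath(), the grid layout, and the two heuristic functions are made up for this illustration, and a sorted array stands in for a real priority queue. Passing a heuristic that always returns zero gives Dijkstra's algorithm with an early stop, while passing the straight-line distance gives A* search.

```javascript
// grid: array of strings where '#' is a wall; moves are up/down/left/right with cost 1.
function findPath(grid, start, goal, heuristic) {
  const key = (p) => p.row + ',' + p.col;
  const best = new Map([[key(start), 0]]);   // cheapest known cost to reach each cell
  const open = [{ pos: start, cost: 0, priority: heuristic(start, goal) }];
  let expanded = 0;
  while (open.length > 0) {
    open.sort((a, b) => a.priority - b.priority);  // stand-in for a real priority queue
    const { pos, cost } = open.shift();
    if (cost > best.get(key(pos))) continue;       // a cheaper route was found later
    expanded++;
    if (pos.row === goal.row && pos.col === goal.col) {
      return { cost, expanded };                   // stop as soon as the goal is dequeued
    }
    for (const [dr, dc] of [[1, 0], [-1, 0], [0, 1], [0, -1]]) {
      const next = { row: pos.row + dr, col: pos.col + dc };
      const cell = grid[next.row] && grid[next.row][next.col];
      if (cell === undefined || cell === '#') continue;
      const known = best.has(key(next)) ? best.get(key(next)) : Infinity;
      if (cost + 1 < known) {
        best.set(key(next), cost + 1);
        open.push({ pos: next, cost: cost + 1, priority: cost + 1 + heuristic(next, goal) });
      }
    }
  }
  return null;   // goal unreachable
}

const noHeuristic = () => 0;                                              // plain Dijkstra ordering
const straightLine = (a, b) => Math.hypot(a.row - b.row, a.col - b.col);  // A* heuristic

const grid = ['.....', '.###.', '.....'];
const start = { row: 0, col: 0 };
const goal = { row: 2, col: 4 };

const dijkstraLike = findPath(grid, start, goal, noHeuristic);
const aStar = findPath(grid, start, goal, straightLine);
console.log(dijkstraLike.cost, aStar.cost);         // 6 6
console.log(dijkstraLike.expanded, aStar.expanded); // cells examined by each search
```

The straight-line distance never overestimates the remaining distance on a grid like this, which is why both searches agree on the path length. The Color Walk heuristic has no such guarantee, and that is the trade-off discussed next.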

In using a heuristic for the Color Walk move graph search, we have given up the guarantee of finding the true shortest path because the heuristic is not perfect, but we gain a huge benefit in efficiency and tractability. Without the heuristic, the search would go on forever (or at least until it ran out of memory) in such a large graph. Even so, we'd like to tighten up the heuristic to find shorter paths, and to make that feasible we need to shrink the portion of the graph that has to be searched. For that, we can bring in our old friend GLA to make fast headway into the move graph before switching to Dijkstra's algorithm.

Adding this hybrid GLA-Dijkstra algorithm is straightforward. We start with the normal task of adding the algorithm to the list of choices in the algorithm pull-down list and to the switch statement that lives behind the list:

    function Solver() {
      // ...
      this.init = function() {
        // ...
        $('#solver_type').change(function () {
          switch (this.value) {
            // ...
            case 'greedy-dijkstra':
              that.solverType = that.dijkstraWithGla;
              that.metric = areaCount;
              break;
            default:
              that.solverType = that.roundRobin;
              break;
          }
          // ...
        });
        // ...
      };
    }

The implementation of the hybrid algorithm is about as simple as the other hybrid algorithms:

    this.dijkstraWithGla = function() {
      // GLA makes the first 15 moves, then Dijkstra's algorithm finishes the board.
      if (moves < 15) this.greedyLookAhead();
      else this.dijkstra();
    }
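Since the solver function gets called over and over until the board is cleared, the move counter alone decides which phase runs. Here is a small, self-contained sketch of that hand-off. The makeHybrid() helper, the two stand-in algorithms, and the state object are all hypothetical and only mimic the behavior described in this series: GLA commits one move per call, while Dijkstra's algorithm plays out its whole path in one call.

```javascript
// Build a solver step that runs `first` until `switchAt` moves have been made, then `second`.
function makeHybrid(first, second, switchAt) {
  return function step(state) {
    if (state.moves < switchAt) first(state);
    else second(state);
  };
}

// Stand-in for GLA: commits exactly one move per call.
function fakeGla(state) {
  state.moves += 1;
  state.log.push('gla');
}

// Stand-in for Dijkstra's algorithm: plays every move of its found path at once.
function fakeDijkstra(state) {
  state.moves += state.movesToFinish;   // pretend the found path has this many moves
  state.done = true;
  state.log.push('dijkstra');
}

const glaThenDijkstra = makeHybrid(fakeGla, fakeDijkstra, 15);
const state = { moves: 0, movesToFinish: 17, done: false, log: [] };
while (!state.done) glaThenDijkstra(state);

console.log(state.log.filter((name) => name === 'gla').length); // 15
console.log(state.log[state.log.length - 1]);                   // 'dijkstra'
console.log(state.moves);                                       // 32
```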

Running GLA for 15 moves seemed reasonable, considering most boards are not solved in fewer than 30 moves; after that, Dijkstra's algorithm is run to the end-of-game condition. Now we have a problem, though. We have two knobs to turn, one for the maximum number of moves to look ahead in GLA and one for the scale factor used in Dijkstra's algorithm, but only one text box in the UI. (Another knob would be the number of moves to run GLA for, but we'll just keep that at 15 to reduce the number of combinations to look at.) We'll want to separate those two knobs by adding another text box for the scale factor to the UI. Let's call it solver_scale_factor and add it as another parameter in the code:

    function Solver() {
      var that = this;
      var iterations = 0;
      var max_moves = 2;
      var scale_factor = 25;
      var time = 0;
      var start_time = 0;

      this.index = 0;
      this.metric = nullMetric;

      this.init = function() {
        this.solver = $('<div>', {
          id: 'solver',
          class: 'control btn',
          style: 'background-color:' + colors[this.index]
        }).on('click', function (e) {
          // Read both knobs from their own text boxes.
          max_moves = $('#solver_max_moves').val();
          scale_factor = $('#solver_scale_factor').val();
          that.runAlgorithm();
        }).appendTo('#solver_container');

        // ...

        $('#solver_play').on('click', function (e) {
          _block_inspect_counter = 0;
          _block_filter_counter = 0;
          iterations = $('#solver_iterations').val();
          max_moves = $('#solver_max_moves').val();
          scale_factor = $('#solver_scale_factor').val();
          start_time = performance.now();
          time = start_time;
          that.run();
        });
      };

      // ...

      function addVertices(vertices, depth, prev_control, prev_cleared) {
        var stop = false;
        _.each(controls, function (control) {
          if (control !== prev_control && !stop) {
            var removed_blocks = control.checkGameBoard(depth, markedBlockCount);

            if (endOfGame()) {
              doMarkedMoves();
              vertices.clear();
              stop = true;
            } else if (removed_blocks - prev_cleared > 0) {
              var markers_dup = markers.slice();
              // Each move costs scale_factor; each cleared block earns 1 back.
              var cost = scale_factor*depth - removed_blocks;
              // Near the end of the board, drop the per-move cost to 5.
              if (removed_blocks > 590 ||
                  (removed_blocks > 560 && vertices.length > 200000)) {
                cost -= (scale_factor - 5)*depth;
              }
              vertices.queue({markers: markers_dup,
                              depth: depth,
                              control: control,
                              cost: cost,
                              cleared: removed_blocks});
            }
          }
        });

        return vertices;
      }
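To get a feel for how the scale factor shapes the order of the search, the cost expression from addVertices() can be pulled out into a standalone function. This is only a sketch: vertexCost() and its queueLength parameter are names invented for this illustration, and the candidate depths and block counts are made-up numbers rather than measurements from a real board.

```javascript
// Same arithmetic as the cost calculation in addVertices(), as a pure function.
function vertexCost(scaleFactor, depth, removedBlocks, queueLength) {
  let cost = scaleFactor * depth - removedBlocks;
  // Near the end of the board (or when the queue is getting large), the
  // per-move cost effectively drops from scaleFactor to 5.
  if (removedBlocks > 590 || (removedBlocks > 560 && queueLength > 200000)) {
    cost -= (scaleFactor - 5) * depth;
  }
  return cost;
}

// Two candidates: a 10-move path that cleared 200 blocks,
// and a 12-move path that cleared 250 blocks.
console.log(vertexCost(28, 10, 200, 0)); // 80
console.log(vertexCost(28, 12, 250, 0)); // 86  -> the shorter path is dequeued first

// With a smaller, purely illustrative scale factor the order flips.
console.log(vertexCost(20, 10, 200, 0)); // 0
console.log(vertexCost(20, 12, 250, 0)); // -10 -> the deeper path is dequeued first

// Close to the end of the board, the cost is the same as 5*depth - removedBlocks.
console.log(vertexCost(28, 30, 595, 0)); // -445
```

Put another way, an extra move only pays for itself if it clears more than scale_factor additional blocks, so a larger scale factor pushes the search toward shorter paths at the risk of exploring many more vertices before it reaches the end of the game.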

Inside

addVertices() we simply replace

max_moves with the new parameter

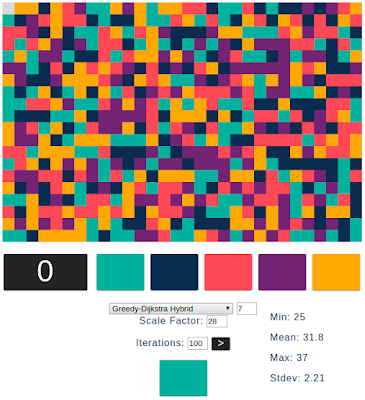

scale_factor. Now we can independently control both parameters and more easily explore variations on this hybrid algorithm. After much experimentation with the max moves in the range of 4-7 and the scale factor in the range of 25-30 using ten iterations, I found that a max moves of 7 and a scale factor of 28 performed well. Then, running for 100 iterations produced the following results.

This is quite good performance, meeting or exceeding the best algorithms in every metric except for the standard deviation as compared to Dijkstra's algorithm alone. But Dijkstra's algorithm didn't do as well on the min, mean, or max statistics, so in absolute terms the hybrid algorithm found better move sequences for nearly every board.

Before looking at the table of algorithm performance, let's add in another quick algorithm by reversing GLA and Dijkstra's algorithm to create the Dijkstra-GLA hybrid algorithm. We can add it to the algorithm list:

    function Solver() {
      // ...
      this.init = function() {
        // ...
        $('#solver_type').change(function () {
          switch (this.value) {
            // ...
            case 'dijkstra-greedy':
              that.solverType = that.glaWithDijkstra;
              that.metric = areaCount;
              break;
            default:
              that.solverType = that.roundRobin;
              break;
          }
          // ...
        });
        // ...
      };
    }

And add another simple algorithm function that calls both of the base algorithms in the hybrid algorithm:

    this.glaWithDijkstra = function() {
      // Dijkstra's algorithm clears the first 300 blocks, then GLA finishes the board.
      if (moves < 5) this.dijkstra(300);
      else this.greedyLookAhead();
    }

Notice that the call to Dijkstra's algorithm now includes an argument of 300. This argument is the number of blocks that should be cleared before Dijkstra's algorithm stops. It's pretty easy to limit the algorithm by adding a condition to the if statement where the algorithm is stopped before it runs out of memory:

    this.dijkstra = function(blocks_to_clear = 600) {
      // The lowest-cost vertex is always dequeued first.
      var vertices = new PriorityQueue({ comparator: function(a, b) { return a.cost - b.cost } });
      vertices = addVertices(vertices, 1, null, blocks[0].cluster.blocks.length);
      this.max_depth = 0;
      while (vertices.length > 0) {
        var vertex = vertices.dequeue();
        markers = null;
        markers = vertex.markers;

        // Commit to this path if the queue is getting too large
        // or enough blocks have been cleared.
        if (vertices.length > 250000 ||
            vertex.cleared >= blocks_to_clear) {
          doMarkedMoves();
          vertices.clear();
        } else {
          vertices = addVertices(vertices, vertex.depth + 1, vertex.control, vertex.cleared);
        }

        vertex.markers = null;
      }
      this.index = null;
    }
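The stopping rule is easier to see in isolation. The following sketch pulls the condition out into a hypothetical shouldStop() helper (not part of the solver) to show how the default parameter keeps existing calls working while still allowing an early stop. The vertex objects here are made-up examples.

```javascript
// Mirrors the stopping condition in dijkstra(): stop when the queue is too
// large or when the dequeued vertex has cleared enough blocks.
function shouldStop(vertex, queueLength, blocksToClear = 600, maxQueueLength = 250000) {
  return queueLength > maxQueueLength || vertex.cleared >= blocksToClear;
}

console.log(shouldStop({ cleared: 310 }, 1200));      // false (default target of 600)
console.log(shouldStop({ cleared: 310 }, 1200, 300)); // true  (halfway target reached)
console.log(shouldStop({ cleared: 120 }, 250001));    // true  (queue too large)
```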

By the default parameter, all blocks are cleared when the algorithm is run so the other two calls to

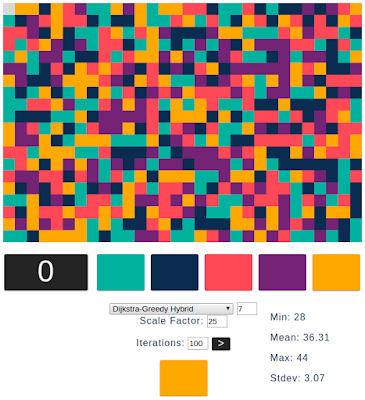

dijkstra() still work like they did before. For this run the max moves was still set at 7, but the scale factor had to be rolled back to 25, like it was for Dijkstra's algorithm alone because otherwise it would stall on some boards. The performance of this hybrid algorithm comes out surprisingly worse:

I didn't expect that just swapping the order of the two algorithms would make such a marked difference in performance. The slightly smaller scale factor doesn't account for the difference, either, because if it's set to 28, as it was in the GLA-Dijkstra algorithm, the performance is even worse. Let's look at how these two hybrid algorithms stack up against the rest of the algorithms we've looked at so far:

| Algorithm | Min | Mean | Max | Stdev |
|---|---|---|---|---|
| RR with Skipping | 37 | 46.9 | 59 | 4.1 |
| Random with Skipping | 43 | 53.1 | 64 | 4.5 |
| Greedy | 31 | 39.8 | 48 | 3.5 |
| Greedy Look-Ahead-2 | 28 | 37.0 | 45 | 3.1 |
| Greedy Look-Ahead-5 | 25 | 33.1 | 41 | 2.8 |
| Max Perimeter | 29 | 37.4 | 44 | 3.2 |
| Max Perimeter Look-Ahead-2 | 27 | 35.0 | 44 | 2.8 |
| Perimeter-Area Hybrid | 31 | 39.0 | 49 | 3.8 |
| Deep-Path | 51 | 74.8 | 104 | 9.4 |
| Path-Area Hybrid | 35 | 44.2 | 54 | 3.5 |
| Path-Area Hybrid Look-Ahead-4 | 32 | 38.7 | 45 | 2.7 |
| BFS with Greedy Look-Ahead-5 | 26 | 32.7 | 40 | 2.8 |
| DFS with Greedy Look-Ahead-5 | 25 | 34.8 | 43 | 3.9 |
| Dijkstra's Algorithm | 29 | 33.1 | 40 | 1.9 |
| GLA-Dijkstra Hybrid | 25 | 31.8 | 37 | 2.2 |
| Dijkstra-GLA Hybrid | 28 | 36.3 | 44 | 3.1 |

While the GLA-Dijkstra hybrid performs better than any other algorithm we've seen so far, and seems to combine all of the best characteristics of its constituent algorithms, Dijkstra-GLA doesn't even perform as well as Dijkstra's algorithm alone. It's more on the level of the max perimeter heuristic, which is decidedly middle-of-the-road as far as these algorithms go. Looking at the boards from a high level, this disparity makes some sense. It looks like at the beginning of a game it's more important to figure out how to remove as many blocks as possible on each move. As the game progresses and gets closer to the end, where the graph search algorithms can "see" more easily to the end of the game, their ability to find the shortest path becomes more effective, and that benefit is especially true for Dijkstra's algorithm because it's more efficient than the other graph search algorithms. Swapping Dijkstra's algorithm and GLA ends up crippling both of them.

Self-Assist

A curious idea comes out of these hybrid algorithms by thinking about the difference between Dijkstra's algorithm and GLA. GLA operates on a per-move basis, meaning for each move under consideration, the algorithm looks some number of moves ahead and then commits to a move before going on to consider the next move. If we string one GLA run together with another, it wouldn't look any different from running GLA all the way through in one pass.

In contrast, Dijkstra's algorithm looks as far forward as it's allowed to try to find the shortest path to the end-of-game condition, and once a path is found, it does all of the moves in that path at once. If we string Dijkstra's algorithm together with another Dijkstra's algorithm, running the first one to the halfway point, it looks different than running Dijkstra's algorithm once for the entire board. The combination of the first run to the halfway point and the second run to the end may find quite a different path than a single run does. It should also run faster because the paths it needs to search are shorter by half. Let's give this idea a try by running Dijkstra's algorithm with itself. First, we add the new hybrid algorithm to the list of choices again:

    function Solver() {
      // ...
      this.init = function() {
        // ...
        $('#solver_type').change(function () {
          switch (this.value) {
            // ...
            case 'dijkstra-dijkstra':
              that.solverType = that.dijkstraDijkstra;
              that.metric = areaCount;
              break;
            default:
              that.solverType = that.roundRobin;
              break;
          }
          // ...
        });
        // ...
      };
    }

And then we can simply call Dijkstra's algorithm twice for the implementation of dijkstraDijkstra() (it's so fun to say, isn't it?):

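    this.dijkstraDijkstra = function() {
      // First pass: run to the halfway point with the scale factor from the UI.
      if (moves < 5) this.dijkstra(300);
      else {
        // Second pass: finish the board with the scale factor found for GLA-Dijkstra.
        // Note: this overwrites the value read from the UI until the UI is read again.
        scale_factor = 28;
        this.dijkstra();
      }
    }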

The first call to

dijkstra() specifies the number of blocks to remove to get to the halfway point. The second call changes the

scale_factor to the optimal value for when Dijkstra's algorithm is run for the later moves, as we found in the GLA-Dijkstra algorithm. The

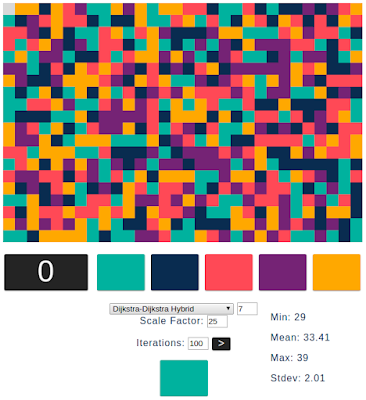

scale_factor for the first run can be set through the UI, so we can experiment a little. We could add another UI element so that two scale factors could be specified, but this should demonstrate the idea without adding that complication. With this simple addition to the algorithms, we can see how it performs:

This version of the hybrid Dijkstra's algorithm performs better than Dijkstra-GLA, but worse than GLA-Dijkstra, adding more evidence to the idea that Dijkstra's algorithm does better in the second half of the game than the first half. The first run of Dijkstra's algorithm to remove 300 blocks probably does not do as well as GLA, but the second run does do better than GLA, giving this hybrid a performance result that lands it squarely in between the other two hybrid approaches.
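To see why two passes can differ from one, it helps to try the idea on something much smaller than a Color Walk board. The sketch below is a toy under stated assumptions: gain() is an invented rule for how many blocks a control clears, search() is a stripped-down best-first search using the same style of cost as addVertices(), a sorted array stands in for the priority queue, and the seen set is a shortcut for the toy that the real solver does not use.

```javascript
// Toy stand-in for a board: choosing `control` after `cleared` blocks are gone
// clears between 1 and 4 more blocks.
function gain(cleared, control) {
  return 1 + ((cleared * 3 + control * 5) % 4);
}

// Best-first search from `start` until at least `target` blocks are cleared.
// cost = scaleFactor * depth - cleared, in the style of addVertices().
function search(start, target, scaleFactor) {
  const queue = [{ cleared: start.cleared, control: start.control, path: [], cost: 0 }];
  const seen = new Set();   // toy-only shortcut: skip repeated (cleared, control) states
  while (queue.length > 0) {
    queue.sort((a, b) => a.cost - b.cost);   // stand-in for a real priority queue
    const vertex = queue.shift();
    if (vertex.cleared >= target) return vertex;
    const id = vertex.cleared + ':' + vertex.control;
    if (seen.has(id)) continue;
    seen.add(id);
    for (let control = 0; control < 4; control++) {
      if (control === vertex.control) continue;   // never repeat the previous control
      const cleared = vertex.cleared + gain(vertex.cleared, control);
      const path = vertex.path.concat(control);
      queue.push({ cleared, control, path, cost: scaleFactor * path.length - cleared });
    }
  }
  return null;
}

const TARGET = 40;
const start = { cleared: 0, control: null };

// One pass over the whole toy board.
const onePass = search(start, TARGET, 3);

// Two passes: commit to a path at the halfway point, then search again from there.
const firstHalf = search(start, TARGET / 2, 3);
const secondHalf = search(firstHalf, TARGET, 3);
const twoPass = firstHalf.path.concat(secondHalf.path);

console.log('one pass:', onePass.path.length, 'moves');
console.log('two passes:', twoPass.length, 'moves');
```

The second pass starts with its depth reset to zero and a much smaller frontier, which is where the speed-up comes from. The price is that the first pass commits to its moves without knowing what the second half of the board looks like, so the combined path is not guaranteed to be as short as the path from a single pass.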

An Assist from the Perimeter

One more option to explore for amping up Dijkstra's algorithm is using other heuristics with the GLA part of the hybrid algorithm. We've continued to use the heuristic of maximizing blocks removed with areaCount(), but we did look at a number of other options for heuristics. Even though they didn't improve over the super-strong area-maximizing heuristic, the other heuristics are potentially interesting for use in paring down the move graph before running Dijkstra's algorithm. They're quite easy to add to our list of algorithms, so let's look at one of them, the perimeterCount() heuristic for maximizing the cleared perimeter:

    function Solver() {
      // ...
      this.init = function() {
        // ...
        $('#solver_type').change(function () {
          switch (this.value) {
            // ...
            case 'max-perimeter-dijkstra':
              that.solverType = that.dijkstraWithGla;
              that.metric = perimeterCount;
              break;
            default:
              that.solverType = that.roundRobin;
              break;
          }
          // ...
        });
        // ...
      };
    }
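Swapping the heuristic takes so little code because the metric is just a function that the solver is handed. A minimal sketch of that pattern follows. The candidate moves, their numbers, and the pickMove() helper are hypothetical, and areaMetric and perimeterMetric are simplified stand-ins for areaCount() and perimeterCount(), whose real implementations inspect the board.

```javascript
// Hypothetical summaries of what each control would clear if chosen next.
const candidates = [
  { control: 'red',   area: 12, perimeter: 9 },
  { control: 'green', area: 7,  perimeter: 15 },
  { control: 'blue',  area: 10, perimeter: 11 },
];

// Simplified stand-ins for the two metrics.
const areaMetric = (move) => move.area;
const perimeterMetric = (move) => move.perimeter;

// One greedy step: pick the candidate that scores highest under the given metric.
function pickMove(moves, metric) {
  return moves.reduce((best, move) => (metric(move) > metric(best) ? move : best));
}

console.log(pickMove(candidates, areaMetric).control);      // 'red'
console.log(pickMove(candidates, perimeterMetric).control); // 'green'
```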

It's so simple that all we had to do was add another choice to the algorithm list and add another case to the switch statement that uses the

dijkstraWithGla() algorithm and the

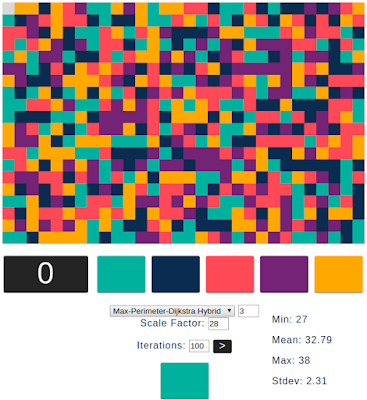

perimeterCount() heuristic. Everything else is already available and ready to go. So how does it perform?

It looks like another decent algorithm—slightly better than Dijkstra's algorithm alone, but not quite as good as GLA-Dijkstra. Here's the updated table of all the algorithms tried so far:

| Algorithm | Min | Mean | Max | Stdev |
|---|---|---|---|---|
| RR with Skipping | 37 | 46.9 | 59 | 4.1 |
| Random with Skipping | 43 | 53.1 | 64 | 4.5 |
| Greedy | 31 | 39.8 | 48 | 3.5 |
| Greedy Look-Ahead-2 | 28 | 37.0 | 45 | 3.1 |
| Greedy Look-Ahead-5 | 25 | 33.1 | 41 | 2.8 |
| Max Perimeter | 29 | 37.4 | 44 | 3.2 |
| Max Perimeter Look-Ahead-2 | 27 | 35.0 | 44 | 2.8 |
| Perimeter-Area Hybrid | 31 | 39.0 | 49 | 3.8 |
| Deep-Path | 51 | 74.8 | 104 | 9.4 |
| Path-Area Hybrid | 35 | 44.2 | 54 | 3.5 |
| Path-Area Hybrid Look-Ahead-4 | 32 | 38.7 | 45 | 2.7 |
| BFS with Greedy Look-Ahead-5 | 26 | 32.7 | 40 | 2.8 |
| DFS with Greedy Look-Ahead-5 | 25 | 34.8 | 43 | 3.9 |
| Dijkstra's Algorithm | 29 | 33.1 | 40 | 1.9 |
| GLA-Dijkstra Hybrid | 25 | 31.8 | 37 | 2.2 |
| Dijkstra-GLA Hybrid | 28 | 36.3 | 44 | 3.1 |
| Max-Perimeter-Dijkstra Hybrid | 27 | 32.8 | 38 | 2.3 |

We have built up quite a list of algorithms, with some of the best performing ones at the very end finally overcoming the surprisingly solid performance of one of the earlier algorithms, GLA-5. If we're looking only at average performance, the GLA-Dijkstra hybrid is the clear winner, with BFS+GLA-5 and Max-Perimeter-Dijkstra hybrid coming in second and third with an average of one extra move per game. However, that higher performance in number of moves comes at a cost. Those algorithms take significantly longer to search for their results than GLA-5 does. If we ordered these top four algorithms based on search speed, the order would be reversed to GLA-5, Max-Perimeter-Dijkstra hybrid, BFS+GLA-5, and GLA-Dijkstra hybrid. At the top of the leaderboard there is a clear trade-off between average performance and search time.

While we've looked at graph algorithms in general and Dijkstra's algorithm in particular fairly extensively now, one thing that was somewhat glossed over was the workings of the priority queue that is the key to making Dijkstra's algorithm work so well. Next time we'll take a closer look at this essential data structure and see how it enables Dijkstra's algorithm to quickly choose each vertex to look at next.

Article Index

Part 1: Introduction & Setup

Part 2: Tooling & Round-Robin

Part 3: Random & Skipping

Part 4: The Greedy Algorithm

Part 5: Greedy Look Ahead

Part 6: Heuristics & Hybrids

Part 7: Breadth-First Search

Part 8: Depth-First Search

Part 9: Dijkstra's Algorithm

Part 10: Dijkstra's Hybrids

Part 11: Priority Queues

Part 12: Summary